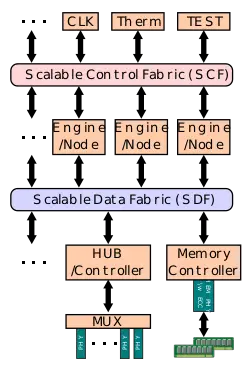

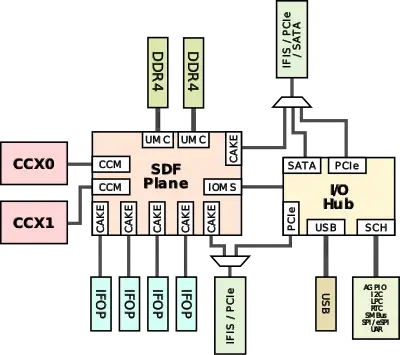


Revision as of 12:19, 23 March 2018

Infinity Fabric (IF) is a system interconnect architecture that facilitates data and control transmission across all linked components. AMD uses this architecture in its recent microarchitectures for both CPUs (i.e., Zen) and graphics (e.g., Vega), and in any additional accelerators it might add in the future. The fabric was first announced and detailed in April 2017 by Mark Papermaster, AMD's SVP and CTO.

Overview

The Infinity Fabric consists of two separate communication planes: the Infinity Scalable Data Fabric (SDF) and the Infinity Scalable Control Fabric (SCF). The SDF is the primary means by which data flows around the system between endpoints (e.g. NUMA nodes, PHYs). The SDF might have dozens of connecting points hooking together things such as PCIe PHYs, memory controllers, USB hubs, and the various computing and execution units. The SDF is a superset of what was previously HyperTransport. The SCF is a complementary plane that handles the transmission of the many miscellaneous system control signals, including thermal and power management, tests, security, and third-party IP. With those two planes, AMD can efficiently scale up many of the basic computing blocks.

Scalable Data Fabric (SDF)

The Infinity Scalable Data Fabric (SDF) is the data communication plane of the Infinity Fabric. All data to and from the cores and the other peripherals (e.g. memory controller and I/O hub) is routed through the SDF. A key feature of the coherent data fabric is that it's not limited to a single die and can extend over multiple dies in an MCP as well as multiple sockets over PCIe links (possibly even across independent systems, although that's speculation). There's also no constraint on the topology of the nodes connected over the fabric; communication can be done directly node-to-node, by island-hopping in a bus topology, or as a mesh topology system.
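
To make the difference between those topologies concrete, here is a minimal sketch that counts link hops between two nodes. It is illustrative only: the graph builders, node numbering, and the choice to model "island-hopping" as a simple chain are assumptions made for this example, not a description of how AMD routes traffic.

```python
from collections import deque


def fully_connected(n):
    """Every node has a direct link to every other node (node-to-node)."""
    return {i: [j for j in range(n) if j != i] for i in range(n)}


def chain(n):
    """Nodes linked in a line; traffic hops through neighbours (assumed bus model)."""
    return {i: [j for j in (i - 1, i + 1) if 0 <= j < n] for i in range(n)}


def mesh(rows, cols):
    """2D grid; each node links to its up/down/left/right neighbours."""
    links = {}
    for r in range(rows):
        for c in range(cols):
            around = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
            links[r * cols + c] = [
                nr * cols + nc
                for nr, nc in around
                if 0 <= nr < rows and 0 <= nc < cols
            ]
    return links


def hops(links, src, dst):
    """Breadth-first search: fewest links crossed from src to dst, or None."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in links[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None
```

With four nodes, `hops(fully_connected(4), 0, 3)` crosses a single link, while `hops(chain(4), 0, 3)` has to pass through both intermediate nodes; a mesh sits between the two extremes.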

In the case of AMD's processors based on the Zeppelin SoC and the Zen core, the block diagram of the SDF is shown on the right. Each of the two CCXs is directly connected to the SDF plane through a Cache-Coherent Master (CCM), which provides the mechanism for coherent data transport between cores. There is also a single I/O Master/Slave (IOMS) interface for communication with the I/O Hub. The Hub contains two PCIe controllers, a SATA controller, the USB controllers, an Ethernet controller, and the southbridge. From an operational point of view, the IOMS and the CCMs are the only interfaces capable of making DRAM requests.

The DRAM is attached to the DDR4 interface, which in turn is attached to a Unified Memory Controller (UMC). There are two UMCs, one for each of the DDR channels, and both are also directly connected to the SDF.
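
The attachment points described for Zeppelin can be summarised as a small data model. This is a rough sketch, not AMD's implementation: the endpoint labels (`ccm0`, `umc1`, and so on), the `route_dram_request` helper, and the reading of the text as one UMC per DDR4 channel are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    CCM = "Cache-Coherent Master"
    IOMS = "I/O Master/Slave"
    UMC = "Unified Memory Controller"


# Per the text, only the CCMs and the IOMS can make DRAM requests.
DRAM_REQUESTERS = {Kind.CCM, Kind.IOMS}


@dataclass(frozen=True)
class Endpoint:
    name: str  # hypothetical label, not an AMD identifier
    kind: Kind


def zeppelin_sdf_endpoints():
    """Endpoints attached to the SDF on one Zeppelin die, as described above."""
    return [
        Endpoint("ccm0", Kind.CCM),    # first CCX
        Endpoint("ccm1", Kind.CCM),    # second CCX
        Endpoint("ioms0", Kind.IOMS),  # I/O Hub
        Endpoint("umc0", Kind.UMC),    # assumed: DDR4 channel 0
        Endpoint("umc1", Kind.UMC),    # assumed: DDR4 channel 1
    ]


def can_request_dram(endpoint):
    """True if this kind of interface may originate a DRAM request."""
    return endpoint.kind in DRAM_REQUESTERS


def route_dram_request(source, target):
    """Return the path a DRAM request takes across the SDF, or raise if invalid."""
    if not can_request_dram(source):
        raise ValueError(f"{source.name} cannot issue DRAM requests")
    if target.kind is not Kind.UMC:
        raise ValueError(f"{target.name} is not a memory controller")
    return [source.name, "sdf", target.name]
```

Modelling the requester rule as a set of kinds keeps the one constraint the text states explicitly (only CCMs and the IOMS issue DRAM requests) in a single place.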

Scalable Control Fabric (SCF)

The Infinity Scalable Control Fabric (SCF) is the control communication plane of the Infinity Fabric.

References

- AMD Infinity Fabric introduction by Mark Papermaster, April 6, 2017

- AMD EPYC Tech Day, June 20, 2017

- ISSCC 2018